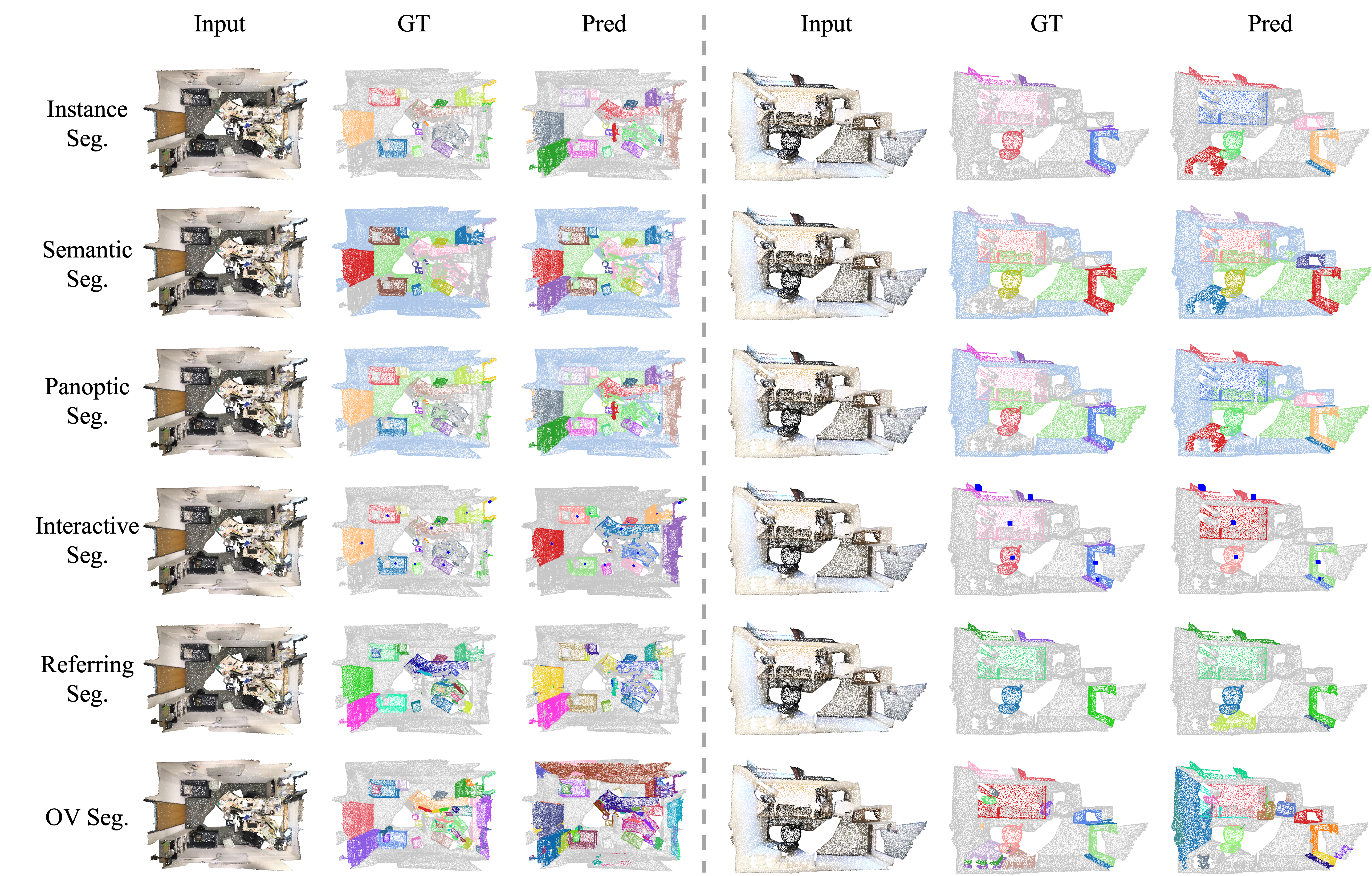

Overview

Unified Tasks

Instance Segmentation

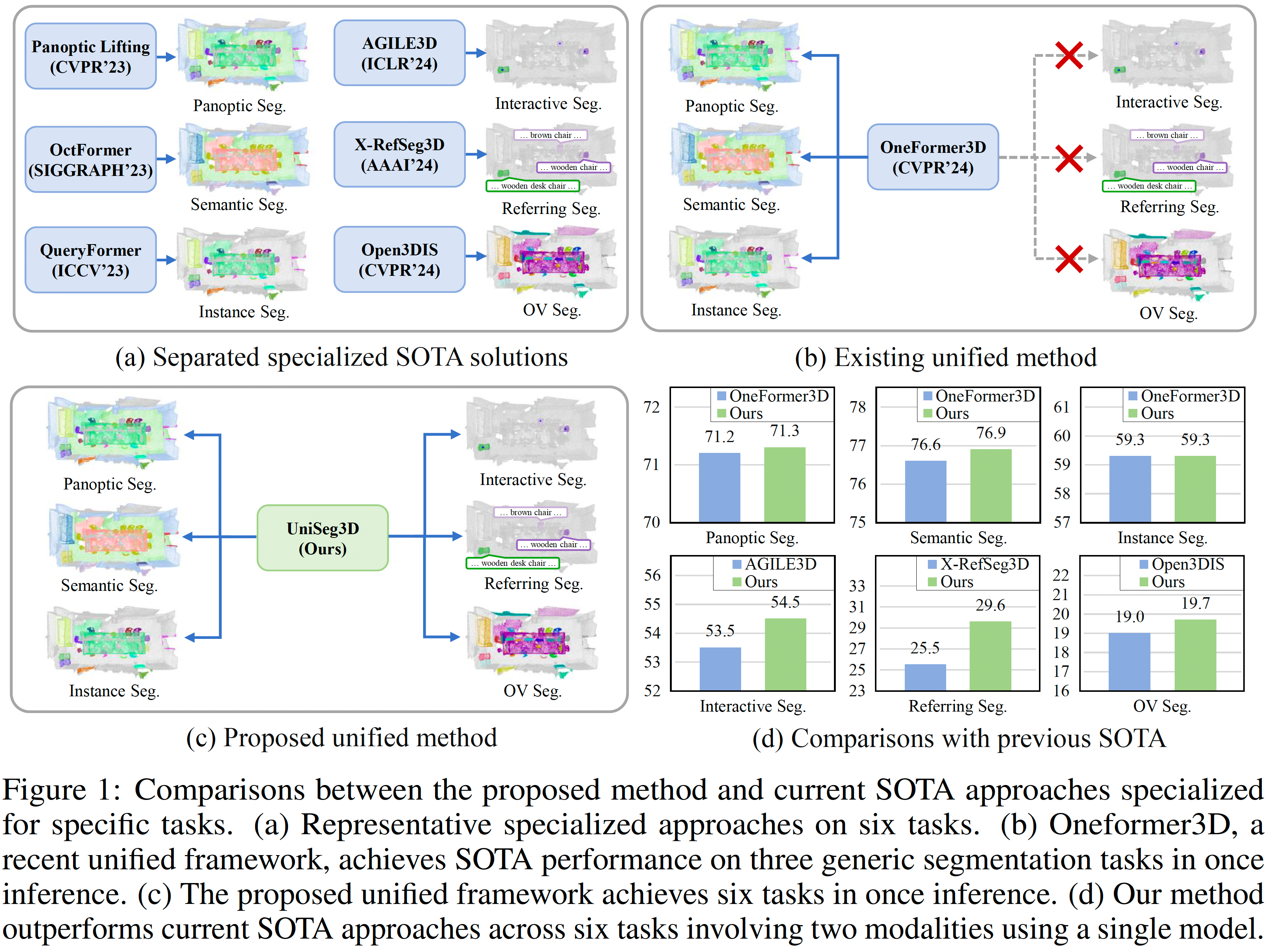

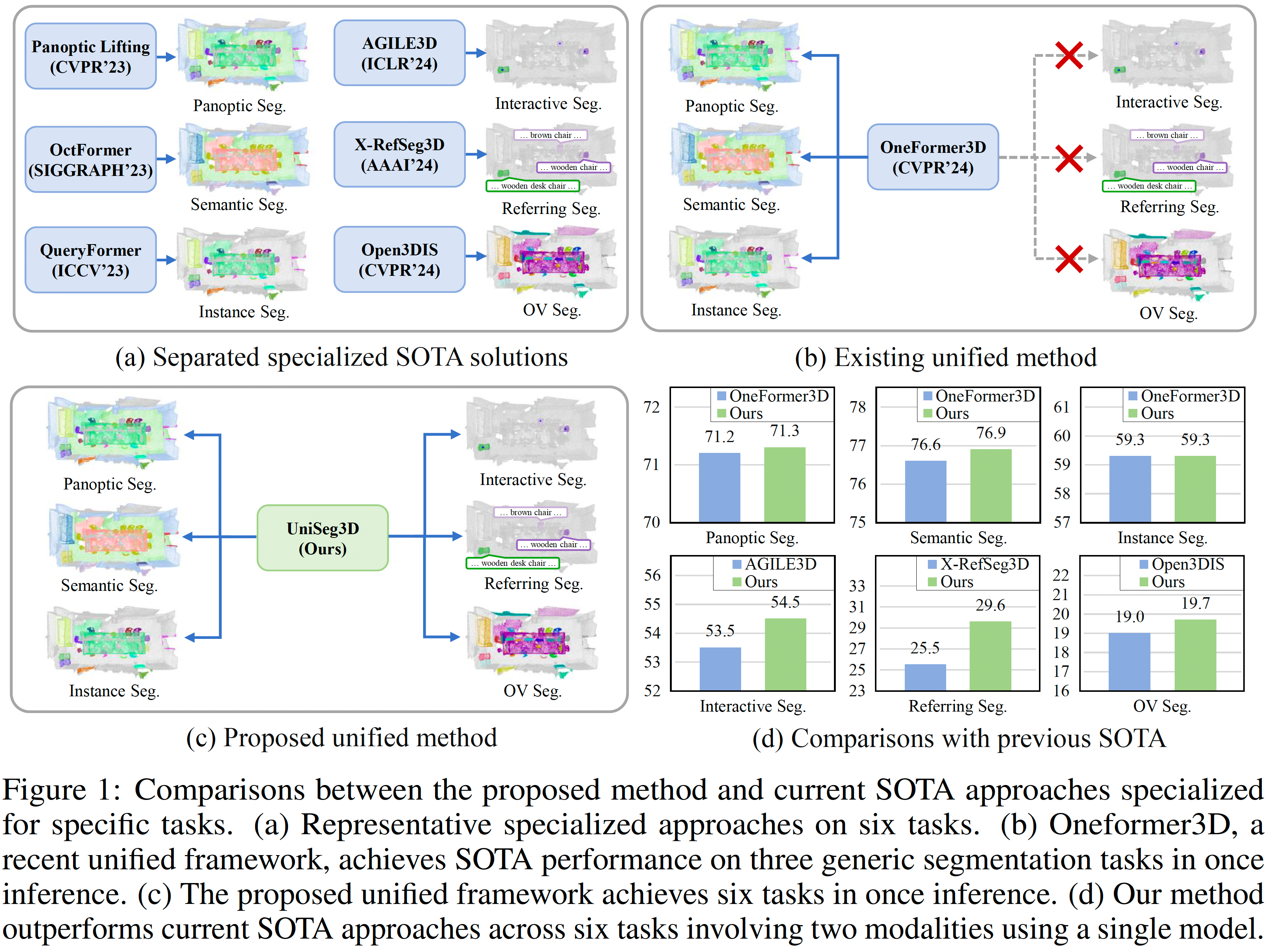

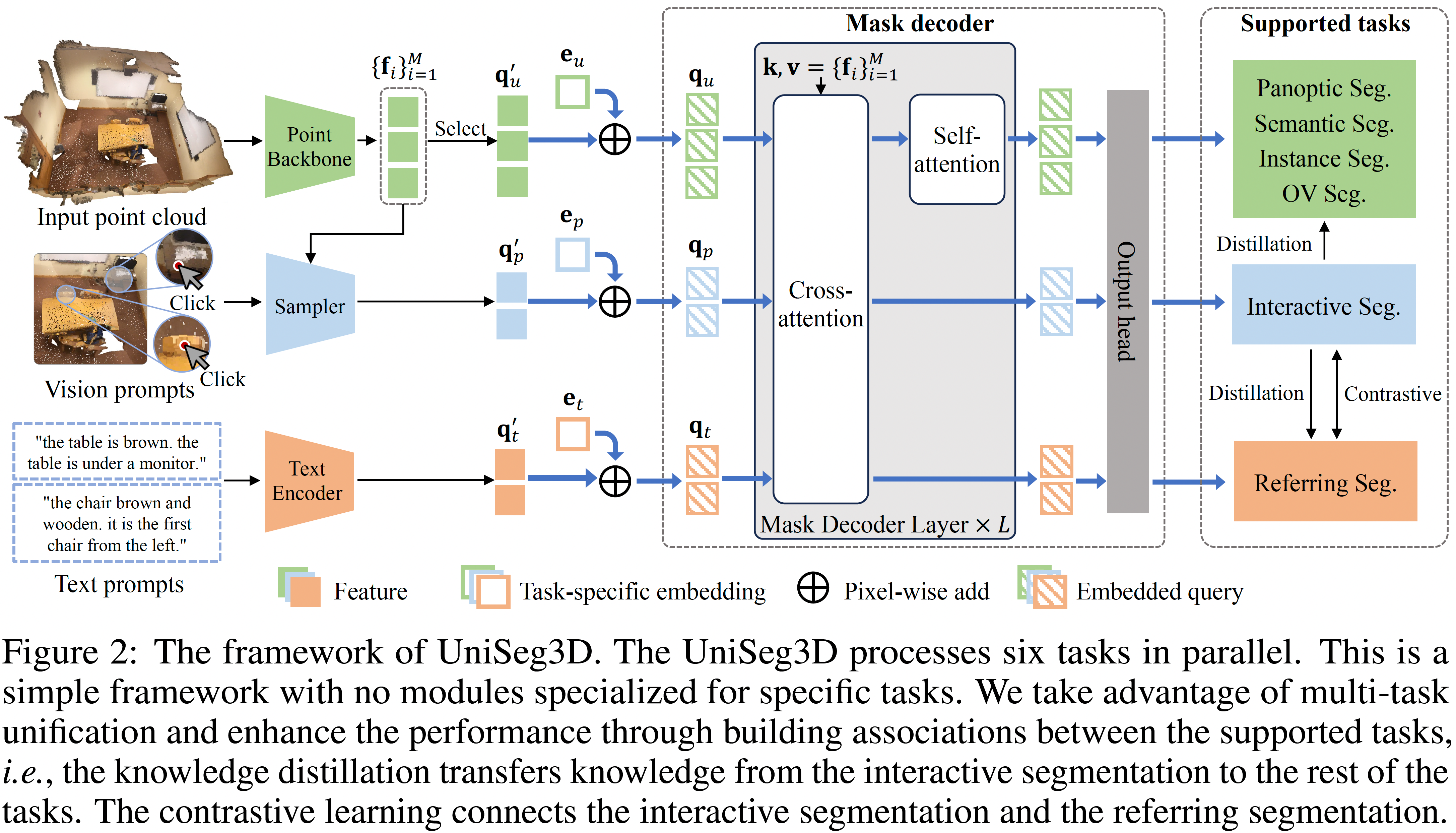

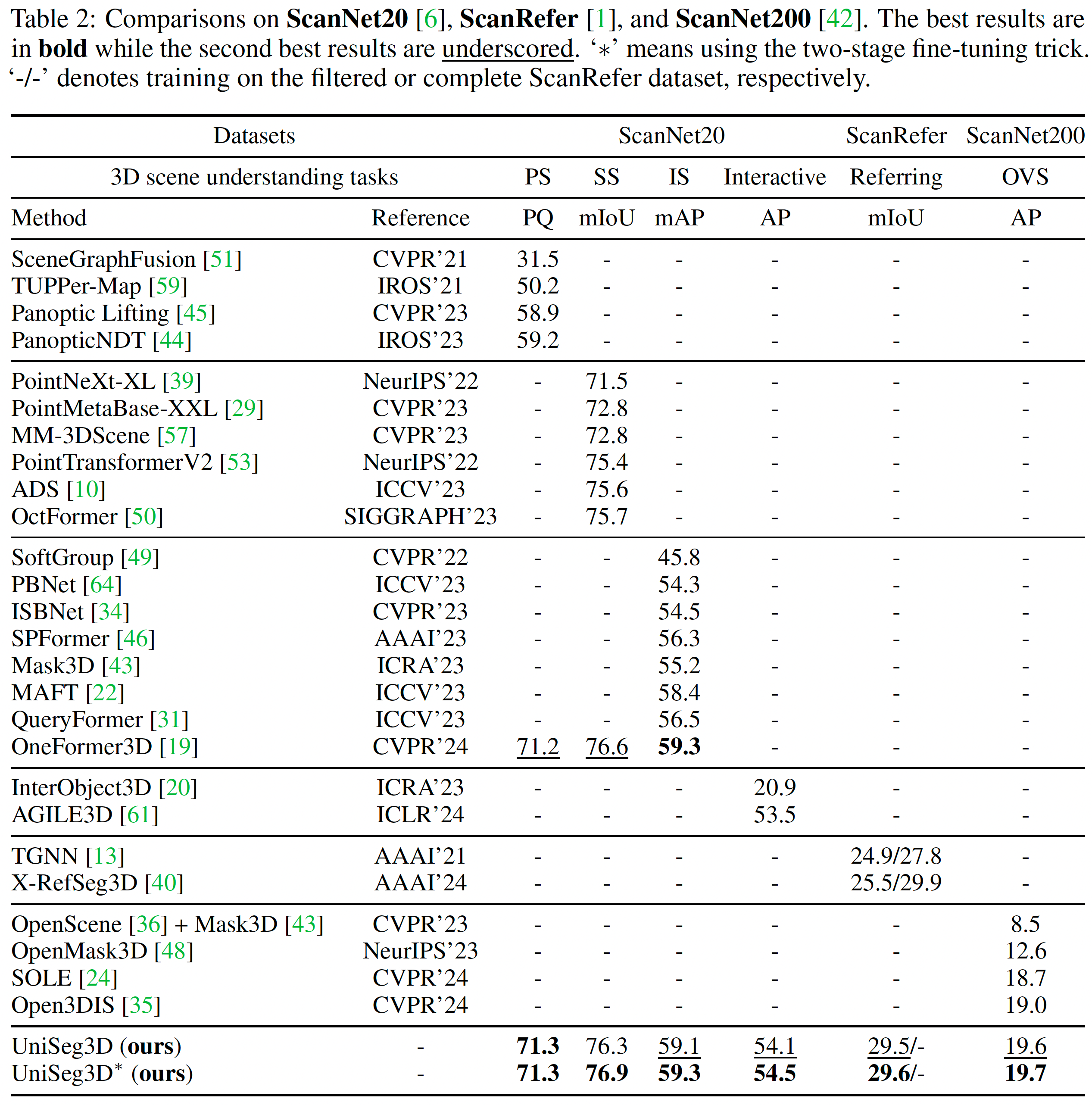

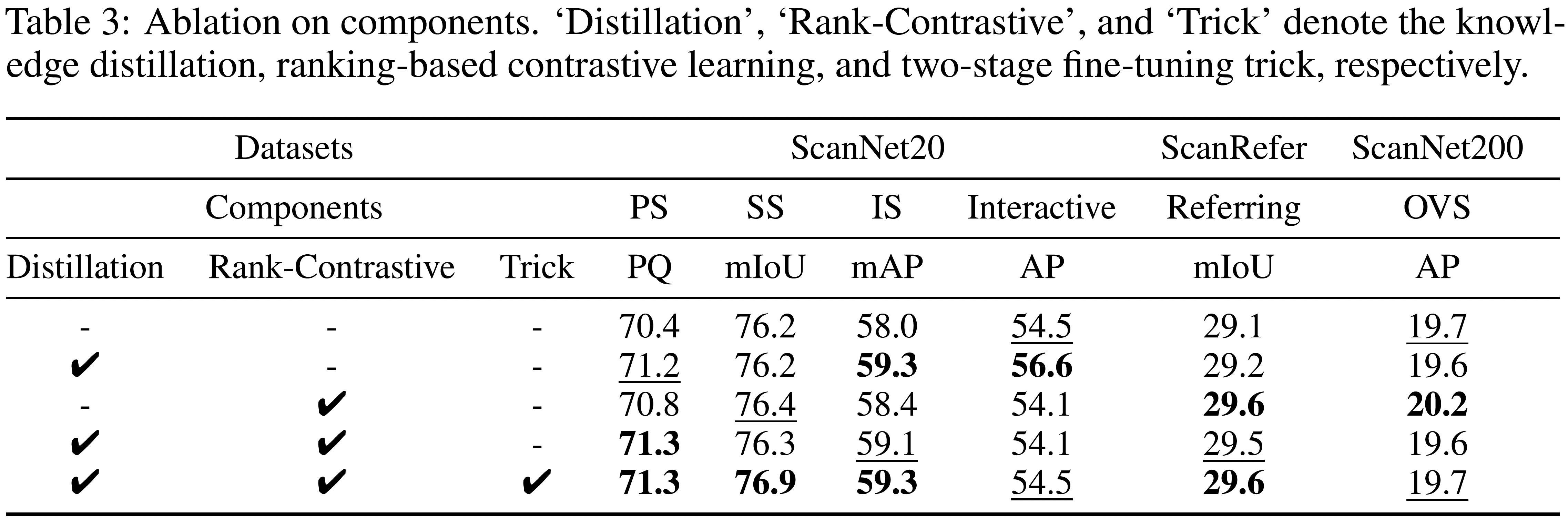

We propose UniSeg3D, a unified 3D scene understanding framework that performs panoptic, semantic, instance, interactive, referring, and open-vocabulary segmentation within a single model. Most previous 3D segmentation approaches are tailored to a specific task, which limits their understanding of 3D scenes to a task-specific perspective. In contrast, the proposed method maps all six tasks to unified representations processed by the same Transformer. This facilitates inter-task knowledge sharing and thereby promotes comprehensive 3D scene understanding. To take advantage of multi-task unification, we further improve performance by establishing explicit inter-task associations. Specifically, we design knowledge distillation and contrastive learning methods to transfer task-specific knowledge across tasks. Experiments on three benchmarks (ScanNet20, ScanRefer, and ScanNet200) demonstrate that UniSeg3D consistently outperforms current state-of-the-art methods, even those specialized for individual tasks. We hope UniSeg3D can serve as a solid unified baseline and inspire future work.
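
To make the idea of contrastive learning between task representations concrete, here is a minimal sketch of a generic symmetric InfoNCE loss in NumPy. It is an illustration only and is not the loss used in UniSeg3D: the function name `info_nce`, the `temperature` value, and the assumption that row `i` of one embedding set (for example, query embeddings from one task) is the positive match for row `i` of the other (for example, embeddings from another task or modality) are all hypothetical choices made for this example.

```python
import numpy as np


def info_nce(a, b, temperature=0.07):
    """Symmetric InfoNCE loss between two sets of paired embeddings.

    a, b: arrays of shape (n, d). Row i of `a` is assumed to be the
    positive match for row i of `b`; all other rows act as negatives.
    This is a generic formulation, not the paper's specific loss.
    """
    # L2-normalize so the dot product is cosine similarity.
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    logits = a @ b.T / temperature  # shape (n, n)

    def cross_entropy_on_diagonal(scores):
        # Numerically stable log-softmax over each row.
        scores = scores - scores.max(axis=1, keepdims=True)
        log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
        # The correct "class" for row i is column i.
        return -np.mean(np.diag(log_probs))

    # Average the a->b and b->a directions.
    return 0.5 * (
        cross_entropy_on_diagonal(logits)
        + cross_entropy_on_diagonal(logits.T)
    )
```

Minimizing such a loss pulls matched embeddings together and pushes mismatched ones apart, which is one common way to associate representations produced for different tasks by a shared model.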

@inproceedings{xu2024unified,
  title={A Unified Framework for 3D Scene Understanding},
  author={Xu, Wei and Shi, Chunsheng and Tu, Sifan and Zhou, Xin and Liang, Dingkang and Bai, Xiang},
  booktitle={Advances in Neural Information Processing Systems},
  year={2024}
}